With the coronavirus crisis, suddenly there was uncertainty everywhere.

Political leaders told us they had to make decisions with incomplete knowledge. Epidemiologists cautioned they could not look into a crystal ball. The media busied itself trying to interpret the pandemic – infections, deaths – insisting meanwhile on how much we just don’t yet know.

Soon we began to hear other, more adamant voices.

An article by Silicon Valley growth hacker Aaron Ginn, viewed millions of times, made the bold statement: "Covid-19 hysteria is pushing aside our protections as individual citizens and permanently harming our free, tolerant, open civil society. Data is data. Facts are facts."

A graph went viral, claiming to show – falsely – that simply wearing face masks had made all the difference in slowing the spread of the pandemic.

Then, obviously, there were the less-than-nuanced political leaders. Like Brazil’s president Jair Bolsonaro: “This medicine here, hydroxychloroquine, is working in every place,” he claimed in late March.

Such bold claims are misleading. We’ve only known the virus for six months. Sure, in the beginning, a lot was still unclear. We didn’t know what the economic consequences would be. We didn’t know which drugs worked, nor the factors contributing to the spread. There are still gaps in our knowledge, but little by little we’re getting answers to important questions.

As statistician Casper Albers – in his turn, also quite firmly – tweeted: "Anyone who claims to have complete, concrete answers to complex issues should be mistrusted. Don’t invite them to your talk show, don’t vote for them, and reject their papers."

Many hopes have been expressed for the legacy of the coronavirus crisis. More solidarity. A new economic system. Less pollution. That kind of stuff.

I hope that we’ll still be able to recognise that we don’t know everything. The recognition of uncertainty does make a difference. Even if it’s about something other than a virus. Because the world is full of uncertainty. We don’t know where the housing market is going, whether a cancer drug will help a patient, or whether we’ll still like our partner in 10 years’ time.

Uncertainty brings doubt and nuance – though neither does well on television. Maybe it’s too complicated. Too boring. Too slow. And yet we would do well to embrace uncertainty.

Doubters are more likely to be right

Forecasting is at least as old as humanity. Forecasts are made all the time. Insurers estimate when someone is going to die, security services estimate whether a war will break out, ordinary people – you and me – estimate whether it is wise to buy a house, marry that person or change jobs. Political scientist Philip Tetlock has spent 30 years researching how we forecast.

And the nice thing about forecasts is that we can live to see if they’re right. Not for vague predictions, like "one day there will be another recession", because in the end that prediction will always come true. But for concrete predictions like the weather forecast, you can check if it actually rained when the forecast said it would.

That’s what Tetlock did with experts’ predictions. For years, he asked questions about the Gulf War or the Japanese housing market. He collected data for two decades and published his conclusions in 2005 in his book Expert Political Judgment. What he found was astonishing: the experts’ predictions were on average no better than a chimpanzee throwing darts.

Experts, like chimpanzees, almost always miss, although sometimes they hit the bullseye by chance. In other words, those experts might as well have flipped a coin. On top of that, the more often someone was seen in the media, the worse the predictions turned out. The talking heads of this world are like chimpanzees with darts.

Surprisingly, or not, the media were excited by Tetlock’s research. But many reporters overlooked one thing: there was a small group of experts whose predictions proved more accurate than the chimpanzees’. They weren’t oracles, but they did a little less badly than the rest.

The fox and the hedgehog

One tendency unites the good forecasters: doubt. In Tetlock’s phrase, they were foxes, not hedgehogs – terms from the Greek war poet Archilochus: "A fox knows many things, but a hedgehog knows one important thing."

Here’s the point, first made more than 2,600 years ago. Hedgehogs don’t doubt. They follow one big idea: Trump is useless, socialism is bad, the Covid-19 measures have to stop. From that idea, hedgehogs assemble unsubtle statements about the future. It makes nice TV, but bad predictions.

Foxes do doubt. About the world – which is full of uncertainty – and about themselves too. They’re guided not by certain dogmatic ideas about how the world should be, but by data that helps to understand how the world is. Foxes don’t do well on television, but they’re better at predicting.

Tetlock put together a team of volunteers – all of the fox type – to take part in a big forecasting competition organised by the US security services. The volunteers were asked questions such as: "Will a country withdraw from the Eurozone this year?" or "How many new cases of Ebola will be reported in the next six months?"

In the league’s first year of competition, Tetlock’s team did better than the other teams. Much better. And it stayed that way for the rest of the game. Four years, 500 questions and more than a million forecasts later, the results were clear: Tetlock had struck gold. The best contestants’ predictions were better than those of security officials with access to confidential information.

These "superforecasters" were not necessarily super-smart, nor did they have much knowledge of the topics that came up in the contest. Their jobs often had little to do with forecasting: a filmmaker, a ballroom dancer, a retired computer programmer. "It’s not really who they are. It is what they do," writes Tetlock in Superforecasting, his book co-written with journalist Dan Gardner.

Foxes know how to handle information wisely, and thus how to make sense of the world. Whether that’s in the family group chat, making better decisions for yourself, or hesitating before you believe a hedgehog on television. Fox-like characteristics emerge not only in the literature on forecasting but in books such as The 5 Elements of Effective Thinking and Thinking, Fast and Slow. Books about – as the titles make clear – thinking.

So how do those foxes think? And can we learn anything from them in this crisis? These five steps can help us understand.

(1) Know your own bias

Humans are not neutral information-processing systems. Some news scares us, other news makes us happy. We’re all too eager to believe one fact, while we prefer to discard another right away.

Take a recent study reported by a leading medical journal, The Lancet, which made headlines at the end of May. The topic: scientific research into hydroxychloroquine, a malaria medication that according to some (including, as we’ve seen, the president of Brazil) helps against Covid-19. The best-known follower of the theory: Donald Trump.

The study did not prove that the drug did anything against coronavirus, but cited what appeared to be dangerous side effects. The risks would be higher for the Covid-19 patients who had been given the drug. Immediately, the World Health Organization (WHO) and national governments adjusted their guidelines. The study was music to the ears of people who were only too happy to see that Trump was wrong. But it turned out that something was wrong with the study.

The dataset (which ran until 21 April) included 73 Australian deaths, which was impossible, since at that date the country had registered only 67 fatalities. Surgisphere, the company behind the dataset, issued a statement to say it had accidentally counted patients in an Asian hospital as Australian. The findings would remain unchanged, the firm claimed.

This was only the beginning. When the Guardian started to dig further, journalists found that Surgisphere had only six employees (now only one) on LinkedIn. None seemed to have a scientific profile – one was a full-time science fiction writer, another was an adult content model. Surgisphere’s contact page linked to a cryptocurrency website, while the company’s chief executive, a surgeon, appeared to have been mentioned in medical malpractice cases.

So the Guardian contacted five major Australian hospitals, which, in view of the 600-patient dataset, were claimed to have cooperated in the study. None had heard of Surgisphere, much less joined its research. In the UK, NHS Scotland, also mentioned on Surgisphere’s website, stated that none of its hospitals had cooperated with the company.

So it won’t come as a surprise that the Lancet decided to withdraw the article. Trump supporters welcomed the decision triumphantly: "THIS MISTAKE MAY HAVE COST LIVES," tweeted Rudy Giuliani, Trump’s lawyer. "TWO OF MY FRIENDS WERE SAVED BY HCQ. NUMEROUS STUDIES DEMONSTRATE IT’S EFFICACY. REPORT THEM!"

The example shows that we interpret information not only with our brains, but also with our gut instinct. What we hold to be "true" has just as much to do with who is saying it, and the group with which we identify ourselves. This trend is evident time and again in research into "motivated reasoning".

Take Dan Kahan’s work in the United States. The researcher found that people interpret information neutrally when it comes to something as simple or uncontested as skin cream. When the topic is controversial, such as gun legislation, they start to reason with the facts to fit their own point of view.

So when reading the news, it’s good to ask: how do I feel about this? For example, the message that hydroxychloroquine has dangerous side effects probably provokes in you an immediate reaction. This quick response occurs in what psychologist Daniel Kahneman calls your "system 1" – your fast, instinctive and emotional mode of thinking. Its priorities are in contrast to your "system 2", which is slower, more deliberate and logical.

So what is your instinctive reaction? Maybe you’re happy to see Trump proved wrong; or disappointed because you hoped it would be a good remedy; or angry because you already believe that science or journalism is biased. Nobody is free of prejudice – it’s human. But a fox is aware of it.

Awareness gives you a necessary modesty, the healthy self-doubt that prevents you from becoming overconfident. In this way, you can adjust by heeding your system 2 – not directly rejecting or believing a message, but for example by looking for sources that may not speak immediately to your existing beliefs.

(2) Look at the problem from all angles

A real fox is not guided by their own bias, but looks at facts. Take that big study of the medicine with the difficult name. A fox wouldn’t see it as the proof that the drug doesn’t work, even if they’re a Trump-hater. The fox would look further.

Because even if the Surgisphere data were correct, it would still be an observational study, not an experiment. To really understand whether a drug actually helps, you have to randomly assign people either to receive it or not, and then compare the two groups. This is known as a randomised controlled trial (RCT), often seen in the medical world as the "gold standard". But mid-pandemic, you may have to rely on sub-optimal methods simply because time is short.
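
To see why random assignment matters, here is a minimal simulation sketch. Everything in it is made up for illustration: the share of severe cases, the death risks and the way doctors hand out the drug are assumptions, not figures from any study. The drug in this toy world does nothing at all, yet the observational comparison makes it look harmful, simply because sicker patients are more likely to receive it.

```python
import random

def death_rate_gap(n, randomised, seed=0):
    """Return death rate of treated minus untreated patients.

    The simulated drug has NO effect; all probabilities below are
    hypothetical values chosen only for illustration.
    """
    rng = random.Random(seed)
    deaths = {True: 0, False: 0}
    counts = {True: 0, False: 0}
    for _ in range(n):
        severe = rng.random() < 0.3  # assumed share of severe cases
        if randomised:
            # RCT: a coin flip decides who gets the drug
            treated = rng.random() < 0.5
        else:
            # Observational: doctors mostly give the drug to severe cases
            treated = rng.random() < (0.8 if severe else 0.2)
        # Risk of dying depends on severity only, never on the drug
        died = rng.random() < (0.30 if severe else 0.05)
        counts[treated] += 1
        deaths[treated] += died
    return deaths[True] / counts[True] - deaths[False] / counts[False]

observational_gap = death_rate_gap(100_000, randomised=False)
rct_gap = death_rate_gap(100_000, randomised=True)
print(f"observational: {observational_gap:+.3f}, randomised: {rct_gap:+.3f}")
```

Under these assumptions the observational gap comes out clearly positive, while the randomised gap hovers around zero. The only difference between the two runs is who decides which patient gets the drug.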

So, a fox may wonder, what else do we know about hydroxychloroquine? The drug has been prescribed for a long time, so more research should be available on side-effects. Plus, the fact that an influential study has been withdrawn doesn’t prove that the drug does help against Covid-19, as Giuliani implied with his tweet.

Meanwhile the results of three studies on hydroxychloroquine have appeared, all based on RCTs and – hopefully – conducted properly. Their conclusion? The drug probably does not help sick patients, nor patients already exposed to the virus. But, as many scientists agree, it’s still worth finding out if the drug might help you before you come into contact with the virus.

So don’t rely on that one newspaper article, but gather as much information as possible. Even from sources that may not match your own conviction, or from a field that you don’t know much about. At the same time, be wary if someone else comes up with a fact that fits their position a little bit too well. And take your time – don’t rush to form an opinion right away.

Once you start looking at a problem from multiple angles, you’ll see it quickly becomes more complex. Look at that hydroxychloroquine discussion. "Miracle cure!" says one camp. "Too dangerous!" says the other. These reactions remind us of hedgehogs, when the truth lies somewhere in the middle: probably not so dangerous, probably not really useful, but maybe if you give it to people who haven’t been exposed yet... Who knows?

Foxes cope with such complexity. Whereas hedgehogs like to have a fixed narrative, foxes know the world is complicated. And changeable.

(3) Change your mind

"When the facts change, I change my mind. What do you do, sir?" Tetlock writes in one of his earlier books, quoting John Maynard Keynes, one of the great economists of the twentieth century.

You don’t always get credit for changing your mind. You’re quickly labelled as unreliable, shifting. Strange. That would imply that at some point you should stop the clock, and never change your point of view. I’m glad I don’t still have to defend the opinions I had as an 18-year-old.

Tetlock found that superforecasters (the crème de la crème among foxes) are willing to adjust their positions when necessary. And they admit when they’ve made mistakes.

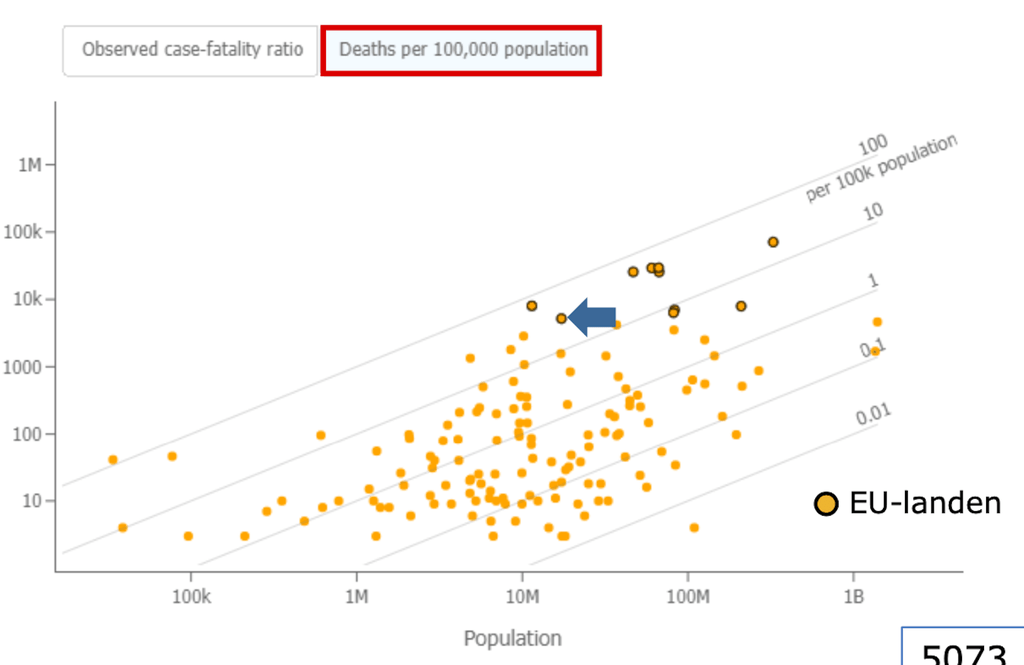

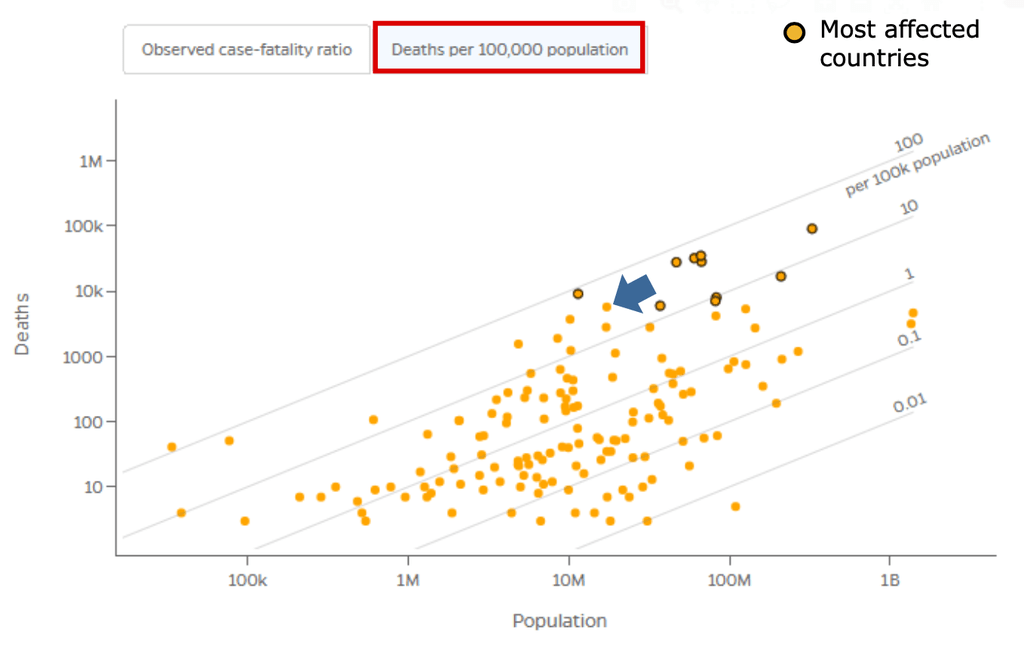

Look at Jaap van Dissel, a representative of the Dutch public health authority (RIVM), who made a mistake on 7 May during a technical briefing to parliament. He showed a graph of mortality rates, in which every dot stood for a country. The marked dots, he said, were the European countries: "You can actually see that the Netherlands is at the bottom of the European countries in this graph," said van Dissel.

It turned out that the marked dots were not EU countries, but those countries with the highest mortality rates. The Netherlands was among them.

Van Dissel corrected his error in the next briefing on 20 May. Anyone can make a mistake, especially under pressure. A real fox admits errors and tries to learn from them. That’s why it’s so important that people in power admit when things go wrong. Like the Swedish epidemiologist Anders Tegnell, who admitted there was "potential for improvement" in his country’s strategy. Or New Zealand’s prime minister Jacinda Ardern, who acknowledged an "unacceptable failure" when two women were not tested after visiting the UK. You only learn if you first admit what went wrong.

And John Maynard Keynes? When Tetlock wrote Superforecasting, he went looking for the source of that quote (having cited the phrase already in an earlier book). Only nobody seems to know where the quote came from. "And I have now confessed to the world," he writes: "Was that hard? Not really."

(4) Don’t think in certainties

So, you have your own biases. Problems can be complex and reality is changing all the time. But that’s not to say you should never make a statement about anything. Lethargy is lurking. Beware! Doubt is not the same as shrugging your shoulders.

People don’t like uncertainty. For a long time, writes Tetlock, humankind has tended to think in two modes: either it is, or it isn’t. That makes sense when you see how we used to live. "Either it is a lion [threatening you, SB] or it isn’t. Only when something undeniably falls between those two settings – only when we are compelled – do we turn our mental dial to maybe."

Yet "maybe" is often the right answer. Did the schools have to be closed at the beginning of the coronavirus crisis? That depends on whether children were contagious. And well, we weren’t sure. Maybe they were, maybe they weren’t.

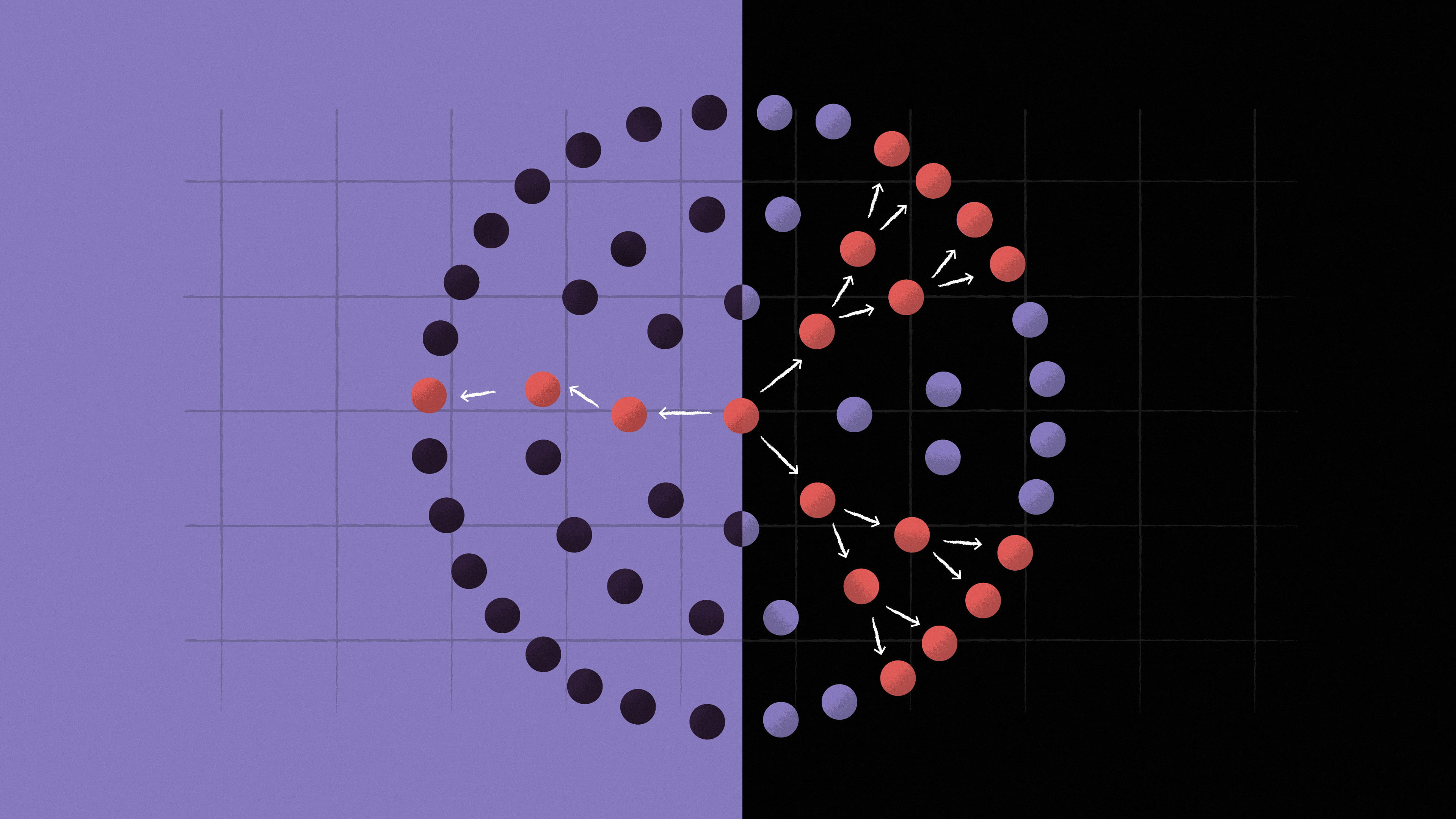

Foxes, Tetlock writes, think in more than three positions. Not just 0% chance, 100% chance, or 50-50. With weather forecasts, we’re used to hearing that there’s a 30% chance of rain tomorrow. If rain did fall on 30% of the days with such an expectation, then the weather people would have been right.
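
The check described here, comparing what a forecaster said with how often it actually happened, can be sketched in a few lines. The forecast record below is invented purely for illustration, and `calibration_table` is a hypothetical helper name, not something taken from Tetlock’s work.

```python
from collections import defaultdict

def calibration_table(forecasts):
    """Map each stated probability to the share of times the event happened.

    `forecasts` is a list of (stated_probability, happened) pairs.
    """
    buckets = defaultdict(list)
    for stated_probability, happened in forecasts:
        buckets[stated_probability].append(happened)
    return {
        stated: sum(outcomes) / len(outcomes)
        for stated, outcomes in sorted(buckets.items())
    }

# Invented record: ten days with a 30% rain forecast (rain on three of them)
# and five days with an 80% forecast (rain on four of them).
record = (
    [(0.3, True)] * 3 + [(0.3, False)] * 7
    + [(0.8, True)] * 4 + [(0.8, False)] * 1
)

table = calibration_table(record)
for stated, observed in table.items():
    print(f"said {stated:.0%}, happened {observed:.0%} of the time")
```

A forecaster is well calibrated when the two columns roughly match. Note that this only works for statements specific enough to be scored as having happened or not.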

How nice would it be if experts in the media also gave us opportunities to test their claims when they make their statements? That would require them to be specific enough to be tested. Whereas, in addition to the fact that they rarely talk in terms of probabilities, experts often shout about such vague things that they can be impossible to disprove ("Recession is coming!").

You may not be so quick to calculate probabilities yourself, but the lesson is still useful: the world isn’t black and white. In most scenarios – outside the pandemic – it’s not 100% certain that something will or will not happen. A scale of probability ranges from 0% probability (definitely doesn’t happen) to 100% probability (definitely does happen).

For example, national governments have to consider the risks of re-opening schools. Some decided to do so. Was that because there was a 0% chance that children were contagious? No. They didn’t give a number, but they thought the chance was small enough based on the evidence available.

Science is built on doubt. You’ll rarely hear from a scientist that something is 100% true, but by working towards a scientific consensus on something that initially we didn’t know anything about (something with a 50-50 probability, for example), we can reach a probability that edges higher or lower.
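
One way to picture a probability that "edges higher or lower" is Bayes’ rule in odds form. This is my own illustration rather than a method the article or Tetlock prescribes, and the likelihood ratios below are made-up numbers standing in for how strongly each hypothetical study favours a claim (above 1) or speaks against it (below 1).

```python
def update(prior, likelihood_ratio):
    """Update a probability with one piece of evidence (Bayes' rule, odds form)."""
    prior_odds = prior / (1 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1 + posterior_odds)

# Start from "we don't know": 50-50.
belief = 0.5

# Hypothetical evidence: two studies in favour, one against, one strongly in favour.
for likelihood_ratio in [2.0, 2.0, 0.5, 3.0]:
    belief = update(belief, likelihood_ratio)
    print(f"belief is now {belief:.0%}")
```

The belief never reaches 0% or 100%. Each study moves it a step, and a study pointing the other way moves it back.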

This is important for decision-making. Because despite all that doubt, a choice has to be made. What do we do with the schools? By now, more research has been done into the contagiousness of children. Each study brings you one step closer to the truth, although the future is still uncertain.

And as far as those decisions are concerned, scientific knowledge also has its limits. Take that much-read article by Silicon Valley technologist Ginn. It’s full of numbers. Epidemiologist Carl Bergstrom persuasively debunked the piece, after which it was taken down. So much for "data is data, facts are facts". Actually data is just data. But facts are facts.

Even if we ignore his mistakes, Ginn deploys facts to veil what are only opinions. His warning that current measures are "permanently harming our free, tolerant, open civil society" is not a factual statement. Politics is politics. Science can inform, but the final choice is a political one.

(5) Find people who can think like a fox

Maybe you’re thinking that edging towards probability, finding multiple sources, all this sounds like a lot of work. Right. To really develop a sound prediction, you have to keep informing yourself, accept that you can change your mind and continuously think about how much uncertainty there is. That’s pretty exhausting.

If you don’t have time for this, look for other people who do. Journalists, scientists, friends. I learned a lot from Ed Yong, a science journalist for the Atlantic. He always acknowledges the uncertainty in science and quotes investigators without cutting out the nuance. I read Science Magazine and FiveThirtyEight (which has a fox logo). These help me to feel that I’m informed in a balanced way, not pushed in a certain direction.

Podcasts are good too, a medium in which generally there is more room for nuance. Where an article often has to be succinct, longer audio interviews can meander, often allowing for a better picture of uncertainties. Take, for example, The Ezra Klein Show and Making Sense with Sam Harris. As well as great interviews, these podcasts are associated with different sides of the political spectrum. You get to hear messages that don’t match your convictions.

But be careful. For a long time, I was a fan of epidemiologist John Ioannidis, whom I also quote in my book. He became famous with his article "Why Most Published Research Findings Are False", which has been fundamental to the field of "meta-science" (research into research). However, since the crisis started, he has been doing a rather sloppy job.

In one study, Ioannidis recruited participants via Facebook ads – not exactly a method that produces representative samples – and the study turned out to be partly funded by an airline tycoon with an interest in showing that the pandemic was not so bad. That’s disappointing for someone who made it his mission to improve the quality of science.

Then of course, I also have to be careful not to let my own biases speak. Because this study was soon picked up by conservatives in the United States, who are not exactly my political favourites. Please convince me if you think I’m wrong, because I’m not entirely objective either. Nobody is.

What I’ve learned from foxes is that objectivity is something you can pursue. By keeping an open mind. We’ll have to learn things we’re not used to and that sometimes feel uncomfortable – doubting, changing our minds, looking outside our bubble – but it makes a big difference.

At least, I think so.

Aspects of this article have been covered in my previous newsletters on superforecasting and hydroxychloroquine.
