Does CO2 cause climate change?

Do vaccinations help stop measles?

Is immigration bringing problems to a country?

A lot of public debates are about questions like these: questions about cause and effect. At a very generalised level, you can often break the debate down into two camps: those who believe that there is a connection and those who don’t.

Climate change deniers don’t believe that human-generated CO2 emissions are causing climate change, while those who acknowledge climate change do.

Anti-vaxxers don’t believe that vaccinations help children stay healthy, while “pro-vaxxers” do.

“Do-gooders” don’t think that immigration brings many social and economic problems, while “xenophobes” do.

Cause-and-effect questions produce heated debates because they are about concrete actions. If you know how something is caused, then you can do something about it – reduce CO2 emissions, launch vaccination programmes, enforce stricter immigration policies.

But if that connection is not there, then such actions will not help, and might even make things worse. Money would be wasted on pointless things, children would get sicker, a shortage of labour would bring down the economy.

Both camps brandish their own science to prove their points. A statistic here gets put up against a graph over there, and a study from one side dismantles one from the other.

So who’s right? To answer that question, we need to dip our toes in the waters of the science of causality – cause and effect. What can scientists say about the thorny questions that we are so concerned with? And what can’t they say?

An age-old quest

In the sixth century BC, gorging on meat and wine was de rigueur for most people in the kingdom of Babylon. But not Daniel. This young Jewish boy and three of his friends had been taken prisoner and pressed into service in the court of King Nebuchadnezzar.

They had to learn Babylonian language and culture, which included the Babylonian habit of consuming copious amounts of wine and meat. But Daniel and his friends refused to partake, since kosher food was not on the menu in the king’s court.

The overseer of King Nebuchadnezzar’s court, Ashpenaz, had a problem with that, because he was sure that unless they ate like proper Babylonians, the Jewish captives would be miserable. And if the king saw “your frowning faces, different from the other children your age, it will cost me my head,” Ashpenaz said.

Daniel had an idea, though. He asked the overseer to feed the four of them a diet of vegetables for 10 days, and keep the other servants on their meat and wine. And after the 10 days, “as thou seest, deal with thy servants”: look at the results and decide what to do. So that’s what they did.

And the experiment was a success. Nebuchadnezzar was impressed by Daniel and his friends, finding them “10 times better than all the magicians and astrologers that were in his realm”.

This biblical experiment shows that the human drive to answer questions of causality goes back a long way. We’ve been trying to understand cause and effect for millennia. What diet will make me healthy? What’s the best strategy to catch a mammoth? What is the force that makes the apple fall out of the tree?

Judea Pearl, computer scientist and author of The Book of Why, contends that, in fact, our thinking about cause and effect is one of the reasons that Homo sapiens became so successful. Because once you make the connection between cause and effect, you have the ability to make things happen in the world. You can grow crops, invent a steam engine, develop medicines.

But Daniel’s experiment teaches us something else, too: how hard it is to investigate cause and effect.

The problem of correlation and causation

Daniel’s idea seemed to do the job. By knowing what diet (cause) made the group healthy (effect), Daniel showed that he could go on eating what he wanted to eat and the servants could serve their master Nebuchadnezzar even better.

The only trouble is: his idea is based on a logical fallacy. The fact that the vegetarian group was healthier does not prove that they were healthier because they ate a vegetarian diet. In the lingo that statisticians use, Daniel was confusing correlation with causation.

Where did he go wrong? Daniel assumed that the two groups, the meat-eating group and the vegetarian group, were the same. That diet was their only difference. At first glance, that would seem to be correct, because Nebuchadnezzar only wanted young men “in whom was no blemish, but well favoured, and skilful in all wisdom, and cunning in knowledge, and understanding science”.

But Daniel and his Jewish friends might also have differed from the others in a thousand other ways. Perhaps they had better genes, stayed more active, or may have simply been healthier than the others at the start.

The fact that you observe two things, a given diet and better health, among the same group (correlation) does not allow you to establish causation – that the one causes the other.
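Daniel’s problem can be sketched in a few lines of Python. This is only an illustration under made-up assumptions: the numbers, the hidden `baseline` fitness score and the function name `health_gap` are all invented here, not taken from any study. In this toy world, diet has no effect on health whatsoever, yet the vegetable eaters still come out looking healthier, simply because fitter people were more likely to end up in that group.

```python
import random
import statistics

def health_gap(n=20_000, seed=0):
    """Mean health of vegetable eaters minus mean health of meat eaters.

    Assumption for illustration: diet has no effect on health at all.
    Health depends only on a hidden baseline fitness score.
    """
    rng = random.Random(seed)
    veg, meat = [], []
    for _ in range(n):
        baseline = rng.gauss(0, 1)  # hidden starting health
        # Fitter people are more likely to end up in the vegetable group.
        eats_veg = rng.random() < (0.8 if baseline > 0 else 0.2)
        health = baseline + rng.gauss(0, 0.5)  # diet plays no role here
        (veg if eats_veg else meat).append(health)
    return statistics.mean(veg) - statistics.mean(meat)

gap = health_gap()
print(f"vegetable eaters minus meat eaters: {gap:+.2f}")
```

The printed gap is clearly positive even though the simulated diet does nothing: a correlation without causation.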

There’s another common variant of this error: the fact that two things happen at the same time (correlation) does not mean that the one caused the other (causation). For example, when you look at the years between 1975 and 1995, you see that the number of brain tumours in the United States increased sharply in the mid-1980s.

When one group of researchers went looking for the cause, their work brought them to focus on a potential culprit: artificial sweetener. Just before the increase in brain tumours, US Americans had begun moving away from putting sugar in their coffee, and were switching to artificial sweetener on a massive scale. That had to have something to do with it, right?

Well, you might think so, until you realise that there were a lot of other things happening in the mid-1980s. Rick Astley released “Never Gonna Give You Up”; the first version of Microsoft Word was released; for that matter, I myself was released at that time. It was 1986 when me and my tufty black hair made our debut in the world, and like Microsoft Word, I’ve been here ever since. Who’s to say that I wasn’t the cause?

To this day, there’s never been any connection demonstrated between artificial sweetener and brain tumours. But here’s a more logical explanation for the spike in the graph. It was around 1985 that the MRI scanner started to become a common piece of equipment in US hospitals; many got their first MRI machine, so, unsurprisingly, around then hospitals started to find more tumours.

“Correlation does not equal causation.” It’s a go-to argument for sceptics in all kinds of debates on all kinds of topics. The tobacco industry, for example, long asserted that smokers were really inherently different people than non-smokers (in the same way that Daniel and his friends were different from the meat-eating servants). And that because of this, the simultaneous rise in the number of smokers and increase in cases of lung cancer didn’t mean that smoking was bad for you (just like the artificial sweetener wasn’t bad per se).

But we now know, of course, that smoking can cause lung cancer. Yes, it’s difficult to investigate cause and effect – but not impossible. These are the four methods that scientists use to unravel cause and effect.

Do a controlled experiment

12 April 1955.

All across the United States, radios are on in offices, televisions are set up in schools, cinemas are packed full of people waiting for the newsreel to start rolling. Everyone is awaiting the announcement of the results of the largest medical experiment ever conducted. Finally, they will learn whether there might be a chance of eradicating the scourge of polio.

Every summer, it was the same: thousands of US children got sick. Young patients filled the hospitals, shuffling around like war veterans, hobbling through the halls on crutches and canes. Or worse: the more severe cases were locked up in the giant metal chamber known as the “iron lung”, the mechanical last hope of keeping the patient’s breathing going. Many didn’t survive it.

And no one knew where polio came from. Was it cats? Imported bananas? Until they knew, it was just a matter of trying everything to keep the disease away: swimming pools were closed; birthday parties all but banned; some thought the answer was hanging bags of healing herbs around the house. People would try anything. Nothing worked.

But there was hope. In Pennsylvania, in the city of Pittsburgh, there was a virologist working on a vaccine – Jonas Salk. He set up a trial involving more than 1.3 million children. Of these, 400,000 were split at random into two groups: one that got the vaccine and a control group that was given a placebo.

The initial results were positive. Salk was so optimistic that he immediately vaccinated himself and his own children. And then came the big moment, in 1955, when the results of the full experiment were to be announced.

But the news had leaked even before Salk and his team announced the results on 12 April 1955. “The vaccine works,” NBC presenter Dave Garroway announced on the Today show. “It is safe, effective and potent.” The church bells rang, schoolchildren ran outside, people across the nation wept with relief.

Salk’s experiment is an example of the first method of investigating cause and effect: the controlled experiment. The assignment of who goes into which group (vaccine or placebo) at random is critical to this type of experiment.

As we have already seen with Daniel’s case, an experiment will run into problems if two groups differ from each other in more than just the method of treatment. For example, if only the parents who, let’s say, have children with other health problems get their children vaccinated (because they’re already worried about their children’s health), this is going to have an impact on your results.

That’s why Salk did what he did, which remains standard practice in medical experiments to this day: he decided who went into which group completely at random. That prevents selection bias, or the tendency to put a certain “type” of person into the treatment group.

The important thing is that there has to be only one difference between the two groups, and that is whether they are getting the treatment or not. That way, when you compare the two groups you can really conclude that the vaccine (cause) reduces the chance of contracting polio (effect).
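To see why the coin flip matters, here is a minimal simulation of a randomised trial. Every number in it is hypothetical (the base risk, the hidden `frail` trait, the assumed effect of the vaccine) and it is not a reconstruction of Salk’s actual data. Because the group is chosen without looking at the child, the hidden trait ends up spread evenly over both groups, and the difference in illness rates reflects the vaccine alone.

```python
import random

def run_trial(n_children=400_000, seed=1):
    """Simulate a randomised vaccine trial and return the illness rate per group."""
    rng = random.Random(seed)
    base_risk = 0.005         # hypothetical chance that an unvaccinated child falls ill
    vaccine_risk_ratio = 0.3  # hypothetical: the vaccine cuts that risk to 30%
    cases = {"vaccine": 0, "placebo": 0}
    totals = {"vaccine": 0, "placebo": 0}
    for _ in range(n_children):
        frail = rng.random() < 0.2  # hidden trait that doubles the risk
        group = rng.choice(["vaccine", "placebo"])  # coin flip, blind to frailty
        risk = base_risk * (2 if frail else 1)
        if group == "vaccine":
            risk *= vaccine_risk_ratio
        cases[group] += int(rng.random() < risk)
        totals[group] += 1
    return {g: cases[g] / totals[g] for g in cases}

rates = run_trial()
risk_ratio = rates["vaccine"] / rates["placebo"]
print(rates)
print(f"estimated risk ratio: {risk_ratio:.2f}")
```

The estimated risk ratio should land close to the assumed value of 0.3, even though the simulation never tells the analysis which children were frail.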

Some 25 years later, in 1979, the United States was declared polio-free. All thanks to Salk’s vaccine (and a second vaccine that was subsequently developed by Albert Sabin).

Since then, vaccination programmes launched around the world have effectively eliminated polio. In 1988, there were still some 350,000 cases; by 2019, that number had been reduced to just 176.

But we’re not there yet

A controlled experiment is nice, but it’s not always an option in practice. Doing such an experiment right is generally very expensive. You’ll need a research team, you might have to pay the participants, and you’re often dealing with expensive interventions or medicines. It’s much cheaper to throw an existing dataset into a statistical software package.

Another problem that can arise in experiments is that try as you might to keep it out, some self-selection often sneaks in. Your treatment group and control group might look like each other, but they may not look like the rest of the world. That’s what psychologist Joseph Henrich and his co-authors argued when they claimed that the samples in psychology are actually “WEIRD”: Western, Educated, Industrialised, Rich and Democratic.

All too often, research findings are generalised to “people”, even though WEIRD people can differ strongly from other groups. Take the Müller-Lyer illusion, where the subject has to pick which line is longer: A or B (see the figure at left).

Most of us will say that line A is clearly longer. In reality, these lines are the same length, as the figure at the right reveals. This is a textbook example of an optical illusion, but follow-up research among small non-WEIRD communities reveals that not everyone is susceptible to it. For example, when researchers showed this figure to members of a tribe in the Kalahari desert, the tribe members weren’t fooled: they saw no difference between the two lines.

The third reason that controlled experiments are not a panacea: some experiments are unethical. You can’t force-feed people organic food, for example, any more than you could force people to drink alcohol, just to see how much is good or bad for you.

Psychological and medical experiments need to be discussed and approved by an ethics commission. The infamous Stanford Prison Experiment, in which psychologist Philip Zimbardo placed 24 male students in an artificial “prison” environment, would never pass muster today.

Finally, lest we forget, there are many controlled experiments that are simply impossible to carry out. We don’t have a stack full of planets available for us to randomly add CO2 into their atmosphere. Or a stack of Europes for us to run various different levels of immigration policy in.

Fortunately, there are other ways to investigate a causal connection.

Exclude all other possible explanations

In August 1960, a young Dutch boy, Robbie Ouwerkerk (11), suffered an outbreak of severe acne. And he wasn’t the only one in the country with his condition. In a very short space of time, there were 100,000 people in the country suffering from rashes, itching and nausea. Some also had a high fever.

Little Robbie tried very hard to figure out where his condition was coming from. He noticed that his brother and father weren’t suffering from it at all. They always ate butter, he realised, while Robbie himself always had margarine. Was that the key?

Robbie told his mother, and she passed it on to the local health services. Immediately, two specialists, epidemiologist Joop Huisman and dermatologist Henk Doeglas, set out across the country, looking for patients with similar symptoms. They found 40, and they asked all of them the same question: “Have you eaten Planta margarine?”

By that time there were indications that the cause might be in the new formula of Planta margarine, produced by Unilever. And indeed, it turned out that every person suffering from these symptoms had consumed this new product. The product contained a newly added emulsifier, ME-18, an agent that was intended to keep the margarine from splattering when heated in a pan. As it turned out, this was the culprit.

But there were still some facts that seemed to challenge this theory. The condition had, it seemed, been known in the Netherlands for some time, even before Unilever had marketed its new margarine. But that could be explained: the ingredients in question had been added to Planta for a short period of time in the past. And a previous outbreak in Germany of Die Bläschenkrankheit, the vesicle disease, in 1958, also proved to be linked to ME-18.

Unilever stopped production of Planta immediately and recalled all of it that was already in the stores. But despite this, two weeks later the condition reappeared. Ultimately, however, this proved to only corroborate the theory: traces of the emulsifier ME-18 had remained in the processing equipment, and later turned up in other margarines.

Ultimately, Planta didn’t survive the scandal. And it also left its mark on Robbie, who forever after was known as “Planta Robbie”.

Come up with a good theory

Robbie’s story shows that thinking things through will get you a long way. He came up with a theory that was then tested by doctors. Every fact has to fit the theory. People who had not eaten Planta didn’t suffer from these symptoms; the people who did develop them a few weeks later could also be connected to ME-18; and perhaps the most important piece of evidence: when Planta was taken off the market, the condition disappeared.

Compare this “acne epidemic” with climate change. We have seen that the average temperature on earth has been going up since 1880 – what could be the cause? A great many possible explanations have been proposed. Could it be, for example, from the sun, which we know goes through cycles of hotter and cooler periods? Could it be volcanic CO2 emissions? Or shifts in Earth’s rotation on its axis?

All of these things are factors that influence the climate. But none of them can explain why Earth’s temperature has risen so sharply over the last 150 years. Looking at the period over which the temperature has increased, you see that (1) the level of solar radiation has not increased, (2) the level of volcanic activity has remained more or less constant, and (3) the Earth’s orbit offers no explanation for the increase.

The only remaining possibility is the increase of CO2 and other greenhouse gases. Here, unlike in the Planta scandal, the connection between cause and effect is clear. There is a solid theory as to why one leads to the other.

Earth reflects solar energy as infrared radiation; greenhouse gases absorb this radiation and send it in all directions, including back to the Earth’s surface. Voilà: an explanation for the increased temperatures.

Science is never 100% certain. We never know; the day may come when someone produces such strong counter-evidence that we need to trash our old theories. But right now, that seems extremely improbable.

“Believe me, there simply is no hidden explanation,” climate scientist Geert Jan van Oldenborgh recently told Dutch national newspaper De Volkskrant. “You can count on the fact that we have all been frantically searching for exactly that hole in the science. If you can find it, you can be sure you’ll be the cover story in Nature.”

Find a natural experiment

There are no natural laws in the social sciences. If a grand unifying theory exists that explains human behaviour, then it is so complicated that we don’t understand it yet. That’s why economists and psychologists can’t make precise predictions.

But that doesn’t mean that these fields should just stop looking at causation. Compare it to knowing that some medical treatments increase the chances a patient will recover but a guarantee of 100% recovery is impossible. Sometimes you can only make a prediction about the impact of a given policy.

Integration of immigrants is one good example. Some say that the best approach is to grant new immigrants citizenship very quickly, to incentivise them to integrate into society. But others say that citizenship should be the reward for proven good integration. One who took the latter position is former Dutch minister of internal affairs Piet Hein Donner, who said: “Dutch citizenship is the crown on participation and integration in society.”

So, you might well ask the question: does citizenship promote integration? But how do you answer it? You can’t just compare people who do and do not have a Dutch passport, because passports are not handed out to just anyone, so there are differences between the immigrants who do have one and those who do not. A controlled experiment would most likely run up against significant criticisms: giving out passports at random is unfair; you are playing dice with people’s futures.

But economist Jens Hainmueller and his colleagues found a context in which you could approach this question scientifically. They studied Switzerland, where the local authorities have democratised the decision of whether an immigrant should be granted Swiss citizenship. In each municipality, when an immigrant applies for citizenship the people with voting rights are sent an abbreviated CV of the person, and they get to vote “yes” or “no”.

It’s not scientifically sound to compare the “winners” with the “losers”, because there are presumably reasons that one immigrant gets enough votes and another does not. But Hainmueller and his colleagues took a different approach: they looked at the people who had just made it (intervention group), and those who had just fallen short (control group).

The idea behind this method was that it might be a question of just getting lucky. It’s the same as in a controlled experiment, they thought; it’s really just random whether you end up in the treatment group or the control group. A small difference in votes is a matter of coincidence. These are groups that you can compare – at least, the ones that stay in Switzerland – if you want to know the impact of getting Swiss nationality. In this case, the researchers looked at whether getting Swiss nationality (cause) resulted in better integration (effect).
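The logic of comparing only the narrow winners and narrow losers can be illustrated with a small simulation. It is a sketch under invented assumptions, not the researchers’ actual analysis: the hidden `appeal` trait, the vote model, the integration score and the assumed citizenship effect of 1.0 are all hypothetical. Comparing all winners with all losers mixes the effect of citizenship with the effect of being a more appealing candidate; restricting the comparison to votes within one percentage point of the 50% threshold removes most of that mix-up.

```python
import random
import statistics

def simulate_applicants(n=50_000, seed=2):
    """Return (yes_share, won, integration_score) for each simulated applicant."""
    rng = random.Random(seed)
    true_effect = 1.0  # assumed boost to integration from getting citizenship
    applicants = []
    for _ in range(n):
        appeal = rng.gauss(0, 1)  # hidden trait that sways voters and aids integration
        yes_share = min(1.0, max(0.0, 0.5 + 0.1 * appeal + rng.gauss(0, 0.05)))
        won = yes_share >= 0.5
        score = 2.0 * appeal + (true_effect if won else 0.0) + rng.gauss(0, 1)
        applicants.append((yes_share, won, score))
    return applicants

def winner_loser_gap(applicants, window=None):
    """Mean score of winners minus losers, optionally only for close votes."""
    if window is not None:
        applicants = [a for a in applicants if abs(a[0] - 0.5) <= window]
    winners = [score for _, won, score in applicants if won]
    losers = [score for _, won, score in applicants if not won]
    return statistics.mean(winners) - statistics.mean(losers)

applicants = simulate_applicants()
naive_gap = winner_loser_gap(applicants)
close_gap = winner_loser_gap(applicants, window=0.01)
print(f"all winners vs all losers: {naive_gap:.2f}")
print(f"within one point of 50%:   {close_gap:.2f}")
```

The naive gap greatly overstates the assumed effect of 1.0, while the close-vote gap should land much nearer to it. A small bias remains because the window is narrow but not infinitely so.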

They observed that people with Swiss citizenship integrated better. More of them had plans to stay in Switzerland, they experienced less discrimination, and they were more likely to read a Swiss newspaper. In short, citizenship seemed to be an additional incentive to integrate.

This analysis is an example of a “natural experiment”. It is a situation that just happens to make it possible to test a causal relationship because there is an inherent element of random chance.

There are a number of different kinds of natural experiments, and the concept of looking at borderline cases is one of them. You can also see this in the research into the impact of bombing runs in the Vietnam War, where a rounding in the algorithm determined whether a given location was or was not bombed; or, similarly, the impact of pension reforms that applied only to people who were born after 1950.

Researchers cannot create a natural experiment; they can only find them. And that’s not easy. As one statistics textbook puts it, “Finding a good natural experiment is like finding a gold nugget in a river.”

The role of science is limited

Climate change, vaccinations, immigration – science has a lot to add to the discussion on these weighty issues. But we can’t expect science to answer all our questions.

The Swiss example is interesting, but the specifics of – say – the Dutch context might be quite different. And how well did the authors measure “integration”, really? One frequently recurring mantra in science (“more research is needed”) seems to be very much appropriate in many places, although not everywhere. On both climate change and vaccination, for example, we have a solid theory that has been confirmed by a preponderance of reliable, reproducible research.

That said, it should be kept in mind that the role of science is always limited. Many discussions of cause and effect are not so much scientific as political. When it comes down to it, they are about distrust of experts, Big Pharma, or elites; fear of losing jobs or national culture; moral outrage about immigration policy.

Science has nothing to say about what is good or bad. We have to decide that for ourselves.

Correction: the original text mentioned Dave Caraway. As a member kindly pointed out, this should have been Dave Garroway.

I would like to express my gratitude to everyone who provided me with examples of research into cause and effect, and in particular to Fransje Sjenitzer for the Planta example, Lex Thijssen for the Swiss citizenship and Vietnam War examples, and @MiLifeStatus who also referred me to the Switzerland case. The passage about WEIRD is taken from my book The Number Bias.

This article first appeared on De Correspondent. It was translated from the Dutch by Kyle Wohlmut.

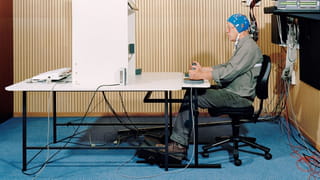

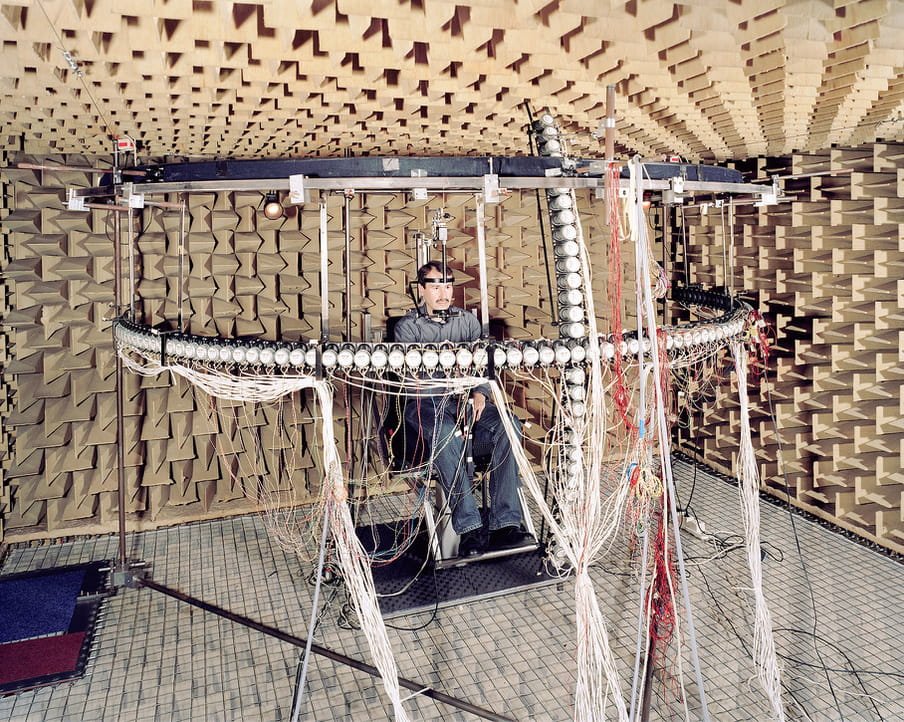

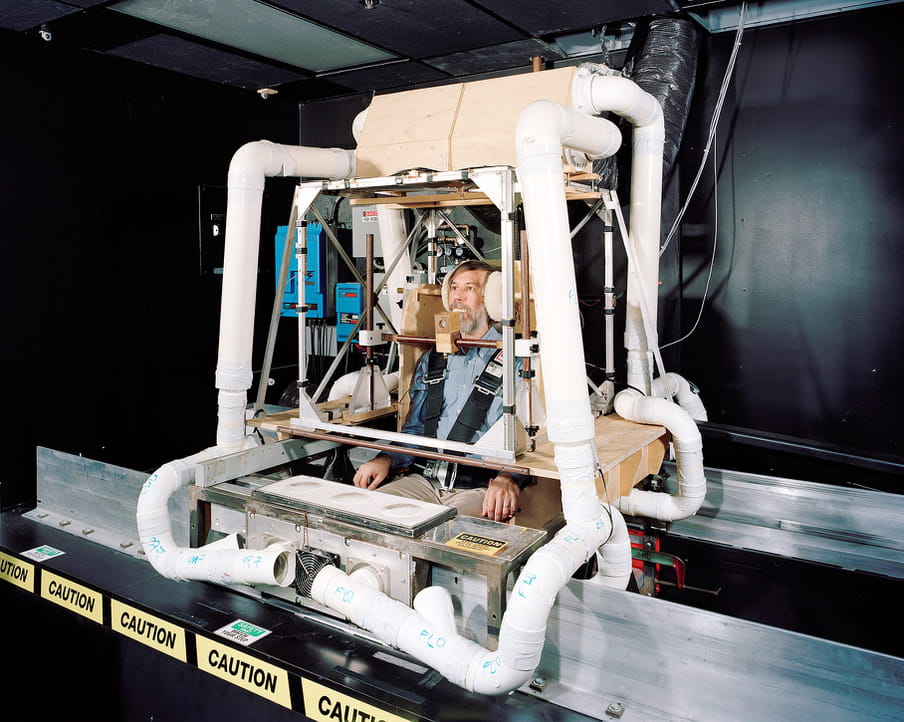

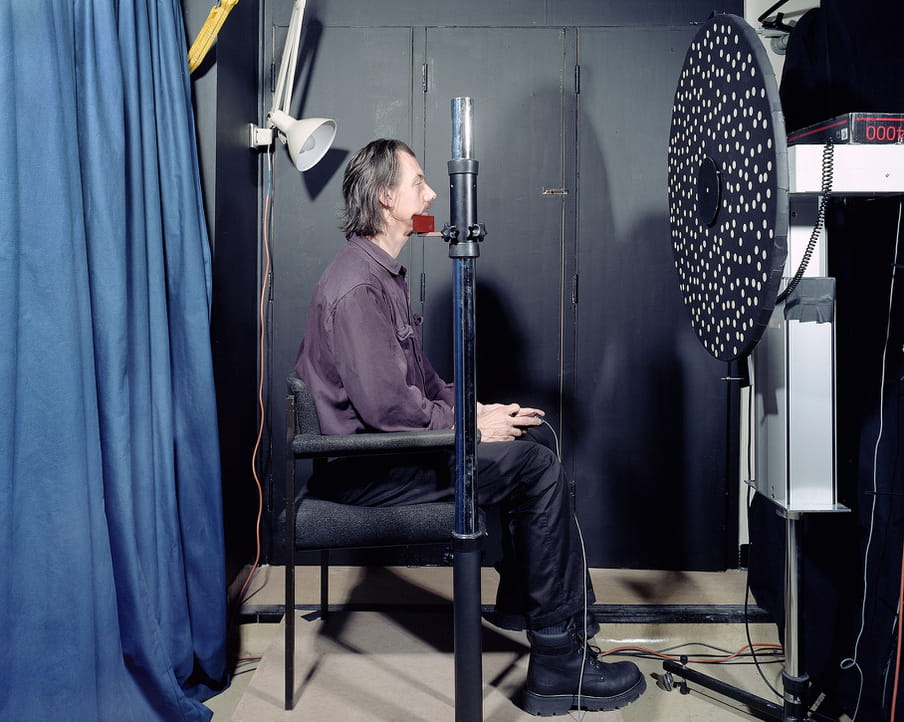

Ways of Knowing

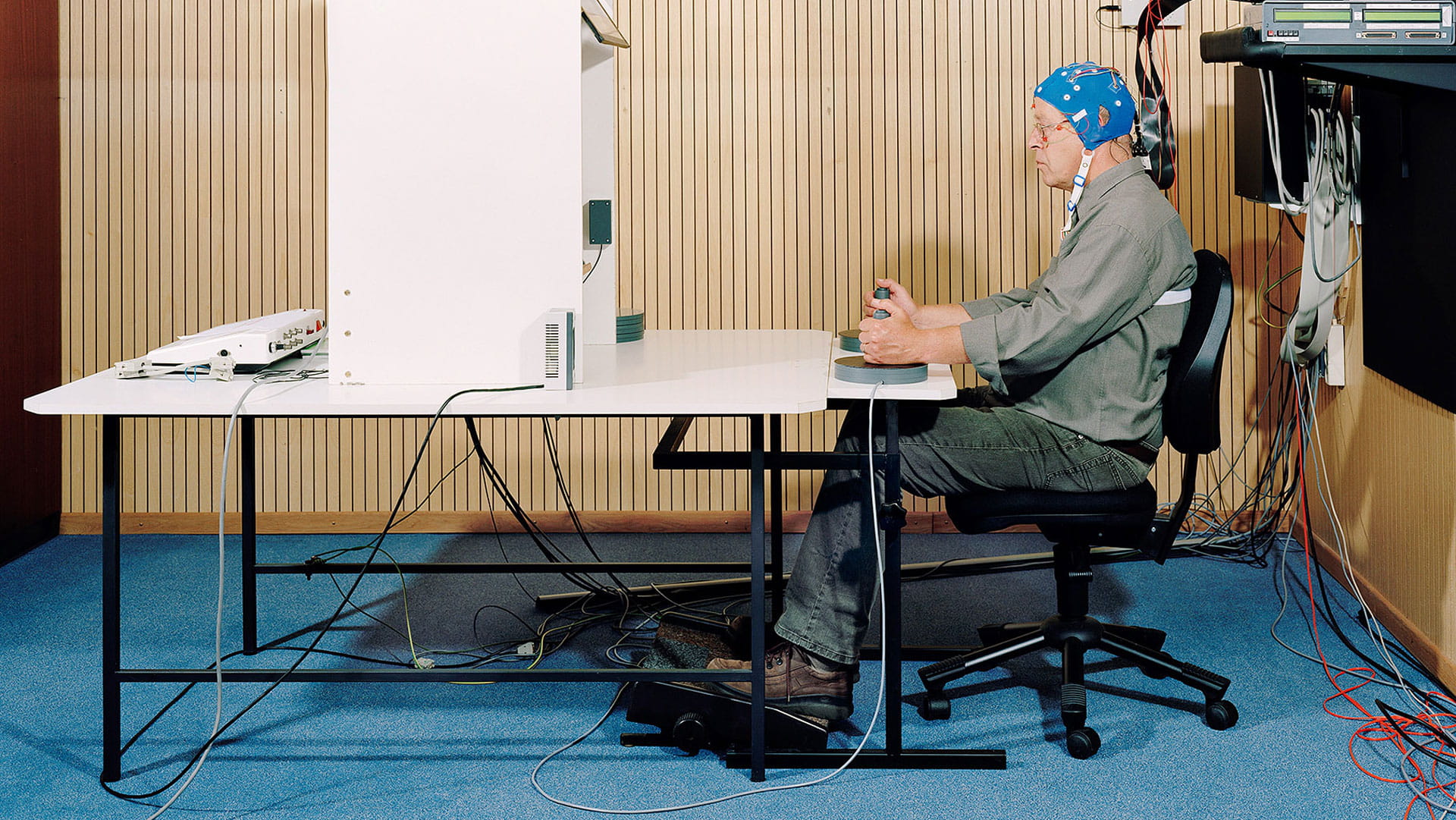

The world of science is an endless search for answers. Scientific truths are true until they are set aside by new evidence. In the series ‘Ways of Knowing’, German photographer Daniel Stier reveals the world behind the evidence. The images used in this article are taken from the first part of this series and show experiments with specially designed machines. Stier zooms in on the individual in the act of submission to giant, self-built machinery. The resulting photographs are absurd and disturbing spectacles that somehow also depict a curious, inherent beauty.

Dig deeper

In praise of doubt: we should be less sure about everything. Right?

Talking heads spout forth online and in the media, each new opinion seemingly more assertive than the last. But the world is full of uncertainty. If you want to make better decisions, dare to doubt, embrace unpredictability and learn to think like a fox.
